Emesent takes part in Navy’s Autonomous Warrior 2020

Search & Rescue Mission Accomplished

Tasked with mapping a hazardous area and locating survivors as part of Autonomous Warrior 2020, Emesent deployed a fleet of autonomous air and ground robots to explore the hypothetical disaster site.

Although the Royal Australian Navy is over a century old, it continues to push boundaries, constantly looking for better and more innovative ways to keep Australians safe. To support this, it holds an event known as Autonomous Warrior, which showcases robotic and autonomous systems and artificial intelligence from joint and allied services, industry, and academia to Navy leadership to encourage their adoption.

This year it was the turn of Emesent, CSIRO’s Data61, and a number of other vendors to show how their technology can support future requirements for disaster recovery. To do this, we were presented with a search and rescue scenario following a disaster in a hostile environment.

Mission Scenario

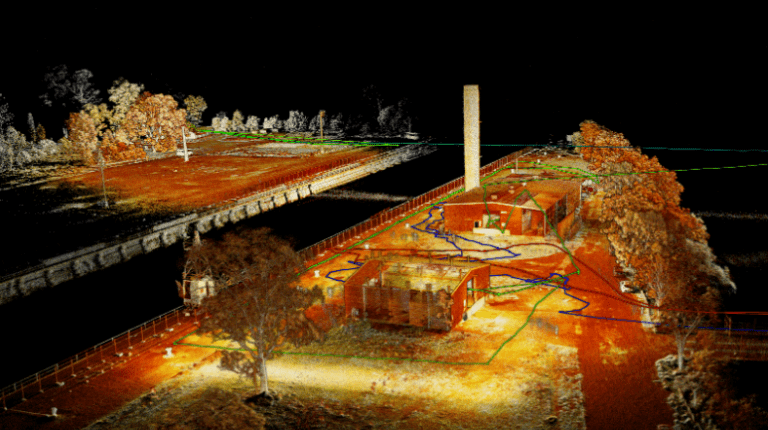

The hypothetical situation was set at a docklands site on the banks of the Brisbane River. An explosion had caused buildings to collapse, trapping and injuring an unknown number of the 45 people on site. It had also blocked the shore-side entrances, leaving only two access points: from the river into a dry dock, or via a possibly damaged pontoon. The mission for Emesent and Data61 was to aid the rescue team. This required providing a map of the area that identified potential hazards and the location and condition of survivors, along with real-time situational data to assist their extraction.

The Solution

Emesent and Data61 rose to this challenge with an autonomous fleet of robots, three airborne and two ground-based, that worked together to explore and map the environment.

Emesent deployed three Hovermap-enabled drones, two of which were launched from the back of Data61’s Titan autonomous unmanned ground vehicle (UGV). Hovermap provided the autonomy and 3D mapping capabilities for the drones.

Two drone and UGV pairs were deployed to explore and map the disaster site and search for survivors.

All five robots were controlled with high-level commands from a single operator. To keep the operator safe at their post, the Forward Operating Base and Command Post (FOB CP) was situated in a remote location.

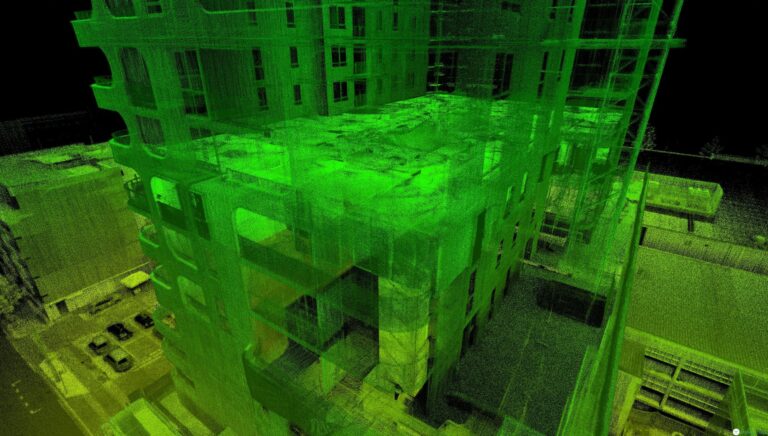

To undertake this mission, each robot was equipped with SLAM-based LiDAR mapping, an autonomy payload, and a camera system trained to detect people and other features. Our advanced multi-agent autonomy allowed the robots to independently and collaboratively navigate, explore, map, and search the hazardous areas. This included GPS-denied environments, such as inside buildings and under forest canopy.

During the mission, the robots shared their individual 3D maps in real-time over a mesh network. This allowed each robot to build a unified, global, and geo-referenced map, shared by all the robots and streamed back to the FOB CP. The mesh network was deployed by the fleet, with each robot acting as a node, and the ground robots deploying additional nodes as needed to maintain connectivity.
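The map-sharing idea can be illustrated with a minimal sketch. It is not Hovermap's or Data61's implementation: it assumes each robot's points are already expressed in the shared, geo-referenced frame, and it simply snaps them to a voxel grid and takes the union. The names `voxelize`, `merge_maps`, and `VOXEL_SIZE_M` are hypothetical.

```python
from typing import Dict, Iterable, Set, Tuple

Point = Tuple[float, float, float]
Voxel = Tuple[int, int, int]

VOXEL_SIZE_M = 0.5  # hypothetical grid resolution, not taken from the article


def voxelize(points: Iterable[Point], size: float = VOXEL_SIZE_M) -> Set[Voxel]:
    """Snap points (assumed to be in the shared map frame) to voxel indices."""
    return {(int(x // size), int(y // size), int(z // size)) for x, y, z in points}


def merge_maps(robot_maps: Dict[str, Set[Voxel]]) -> Set[Voxel]:
    """Union every robot's occupied voxels into one global map."""
    merged: Set[Voxel] = set()
    for voxels in robot_maps.values():
        merged |= voxels
    return merged


# Two robots observe overlapping parts of the same wall plus one distinct point.
robot_maps = {
    "drone_1": voxelize([(0.1, 0.2, 1.0), (0.6, 0.2, 1.0)]),
    "ugv_1": voxelize([(0.7, 0.3, 1.2), (5.0, 5.0, 0.0)]),
}
global_map = merge_maps(robot_maps)
```

Because a set union is order-independent, every robot that receives the same voxel sets over the network ends up with the same merged map, regardless of the order in which messages arrive. A real system would also need to align the individual maps before merging, which this sketch leaves out.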

The locations of relevant items detected during the exploration were accurately reported by the robots in 3D map coordinates.
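Reporting a detection in map coordinates comes down to a frame transform. The sketch below is an assumption-laden illustration rather than the method used in the exercise: it treats the robot pose as a position plus yaw only (roll and pitch are ignored), and `detection_to_map` is a hypothetical helper name.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def detection_to_map(robot_xyz: Vec3, robot_yaw_rad: float, offset_xyz: Vec3) -> Vec3:
    """Rotate a robot-frame offset by the robot's yaw, then add the robot position."""
    rx, ry, rz = robot_xyz
    ox, oy, oz = offset_xyz
    cos_y, sin_y = math.cos(robot_yaw_rad), math.sin(robot_yaw_rad)
    return (
        rx + cos_y * ox - sin_y * oy,
        ry + sin_y * ox + cos_y * oy,
        rz + oz,  # yaw-only assumption: height offset passes through unchanged
    )


# A drone at (10, 5, 2) facing along +y sees a person 4 m ahead and 2 m below it.
survivor = detection_to_map((10.0, 5.0, 2.0), math.pi / 2, (4.0, 0.0, -2.0))
```

In this example the survivor lands at approximately (10, 9, 0) in the map frame, which is the kind of coordinate an operator could click on in the shared 3D map.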

PHASE I – RECON

The FOB CP was established at a safe location and allowed a single operator to control all five robots with high-level commands.

Phase I of the mission required assessing the situation and identifying safe areas for the remote FOB CP.

To do this, a Hovermap mounted on a DJI M300 drone was launched to map and inspect the area from above, streaming a low-resolution 3D map and high-resolution visual and thermal video back to the recovery team. This information allowed them to select a safe area and establish the FOB CP.

PHASE II – SEARCH & DETECTION

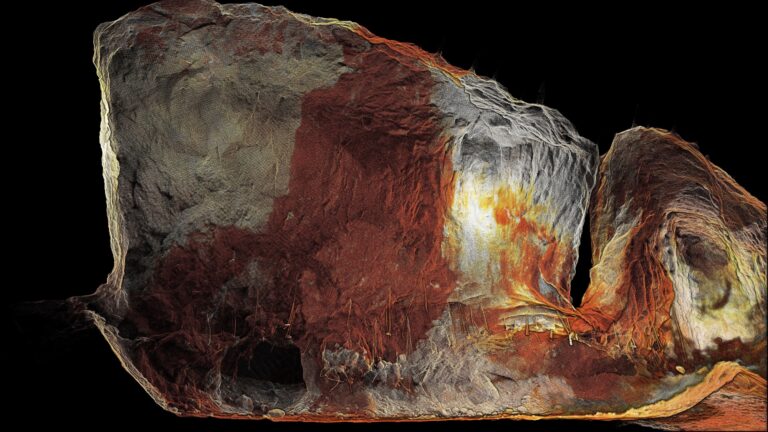

Mapping and exploration were conducted inside buildings and under tree canopy, thanks to the GPS-denied flight and collision avoidance capabilities of Hovermap.

Once the FOB CP was set up, the two drone-carrying Titan UGVs were deployed from it to explore the explosion site. The first drone and UGV pair traveled towards the rescue site before the drone, fitted with a Hovermap, was autonomously launched from the UGV. The UGV explored from the ground while the drone explored from above.

Thanks to Hovermap’s GPS-denied flight and collision avoidance capabilities, the drone was even able to fly inside the buildings to search for survivors. For this phase, high-level commands were sent to the robots with an operator providing tasks in the 3D map.

The second UxV (unmanned vehicle) pair was then sent to investigate and map a different area with geo-fence restrictions. Once the drone had searched and mapped this area, it departed to map a nearby forest area, including below the tree canopy and in between small shrubs. The robots continued the exploration and search until they had completed their mission or their battery levels were running low. The drones and UGVs then automatically returned to the FOB CP.
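The behaviour described here, exploring inside a geo-fence until the work is done or the battery runs low, can be sketched as a simple decision rule. This is a hypothetical illustration only: the threshold `LOW_BATTERY_FRACTION`, the function names, and the 2D polygon fence are assumptions, and the article does not describe how the actual autonomy stack makes this decision.

```python
from typing import List, Tuple

Point2D = Tuple[float, float]

LOW_BATTERY_FRACTION = 0.25  # hypothetical threshold, not taken from the article


def inside_geofence(point: Point2D, fence: List[Point2D]) -> bool:
    """Ray-casting point-in-polygon test against the fence vertices."""
    x, y = point
    inside = False
    for i in range(len(fence)):
        x1, y1 = fence[i]
        x2, y2 = fence[(i + 1) % len(fence)]
        # Only edges that straddle the point's y value can cross the ray.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def next_action(battery: float, unexplored: List[Point2D], fence: List[Point2D]) -> str:
    """Return 'explore' or 'return_to_base' for a single robot."""
    if battery <= LOW_BATTERY_FRACTION:
        return "return_to_base"
    allowed = [p for p in unexplored if inside_geofence(p, fence)]
    return "explore" if allowed else "return_to_base"


fence = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0), (0.0, 10.0)]
plenty_left = next_action(0.8, [(5.0, 5.0), (20.0, 5.0)], fence)
battery_low = next_action(0.2, [(5.0, 5.0)], fence)
nothing_allowed = next_action(0.8, [(20.0, 5.0)], fence)
```

Checking the battery first means a robot heads home even when unexplored targets remain, while filtering targets through the fence keeps it from being sent outside the permitted area.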

The 3D maps and survivor detection data were streamed in real-time from all four robots to the FOB CP. There they were merged into one map and presented to the operator and rescue team.

PHASE III – THE RESCUE

Survivors identified and their images captured by the drone-mounted Hovermap.

The results of these missions provided the response team with an accurate map of the area, both indoors and under the canopy. They also identified survivors and provided their locations in the 3D map, as well as images, which allowed the team to determine their condition.

From this information, the team decided to deliver communications and first aid kits to one group of survivors who were in critical condition. This was carried out by Freespace Operations using a slung load system and heavy-lift drone. The delivery drone was directed by waypoint navigation from Google Maps.

A Navy rescue team was then deployed to extract the survivors and take them to safety.

THE RESULTS

The merged 3D map was streamed back to the FOB CP, providing an overview of the area and hazards.

This successful mission follows on from the DARPA Subterranean Challenge, where Emesent and Data61 worked together on multi-agent autonomy and mapping and advanced to the finals in 2021.

The potential value that Emesent’s Hovermap offers in defense, security, and crisis management also led to a recent investment in Emesent by In-Q-Tel.

This mission enabled Emesent and Data61 to test out the abilities of their ground-breaking advanced multi-agent autonomy systems and demonstrate that:

- A robot fleet can be used in mission planning and mission execution, reducing risks for humans.

- It is possible for multiple UxVs to explore and collaboratively execute a complex mission.

- Working in GPS-denied environments is possible with our advanced autonomy and operation.

- Real-time situational awareness can be streamed to the ground control station or FOB CP.

- Multiple, heterogeneous UxVs can be controlled from a ground control station or FOB CP, by one human operator.

- A drone can autonomously be launched from an autonomous UGV.

- A self-healing mesh network can be established with robot-deployed comms nodes.

- A real-time 3D map can be created and shared by a multi-agent fleet.

- Survivors can automatically be detected, classified, and localized by the robot fleet.

The accomplishment at Autonomous Warrior 2020 cements Hovermap’s position as a powerful tool for the growing incident and emergency response market and paves the way for further potential use cases in the defense industry.